Software development now costs less than the wage of a minimum wage worker

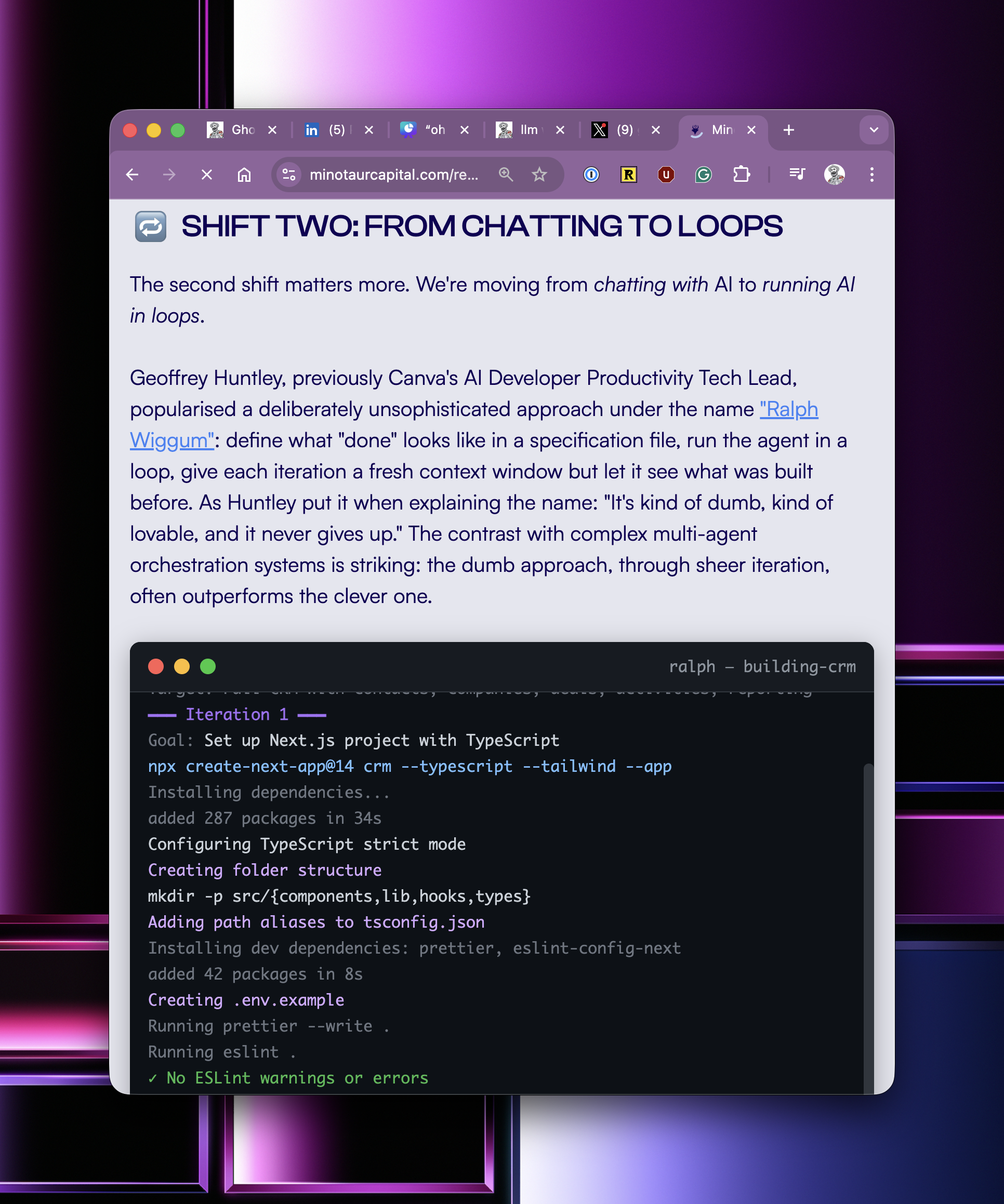

Hey folks, for the last year I've been pondering this and doing game theory around the discovery of Ralph, how good the models are getting, and how that's going to intersect with society. What follows is a cold, stark write-up of how I think it's going to go down.

The financial impacts are already unfolding. Back when Ralph started to go really viral, there was a private equity firm that was previously long on Atlassian and went deliberately short on Atlassian because of Ralph. In the last couple of days, they released their new investor report, and they made absolute bank.

I discovered Ralph almost a year ago today. When I made that discovery, I sat on it for a while and focused on education: teaching juniors, writing prolifically, and doing keynotes internationally, pleading with people to pay attention and to invest in themselves.

It's now one year later, and the cost of software development is $10.42 an hour, which is less than minimum wage. A burger flipper at Macca's gets paid more than that. What does it mean to be a software developer when everyone in the world can develop software? Just two nights ago, I was at a Cursor meetup, and nearly everyone in the room showing off their latest and greatest creations was not a software developer.

Well, they just became software developers because Cursor enabled them to. You see, the knowledge and skill of being a software developer have been commoditised. If everyone can be a software developer, what does that mean if your identity function is that you're a software developer and you write software for a living?

My theory of how it all goes down and gets feral really, really fast is quite simple...

For the past month, I've been catching up with venture capitalists in Australia and San Francisco and rubber-ducking this concept. You see, a lot of them aren't even sure whether their business model as venture capitalists still exists.

Why does someone need to raise a large amount of capital if it's just a five-man show now?

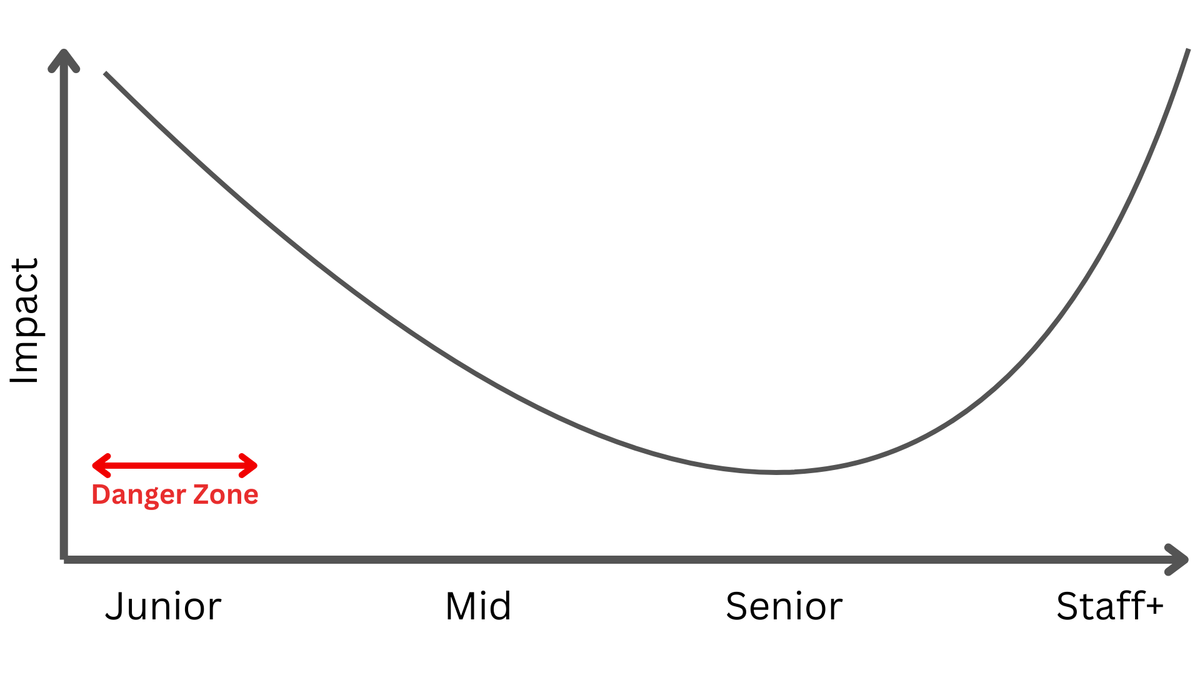

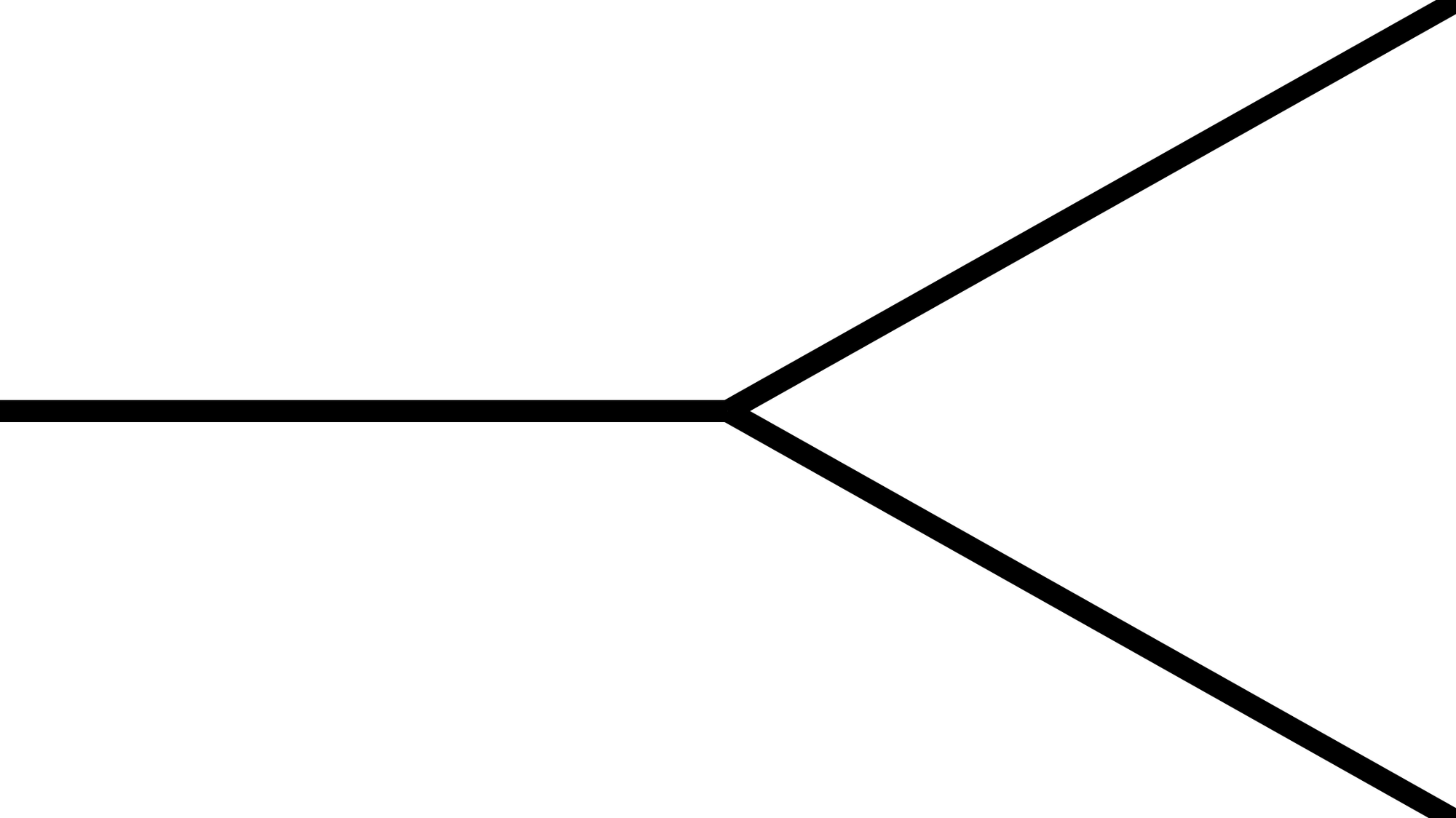

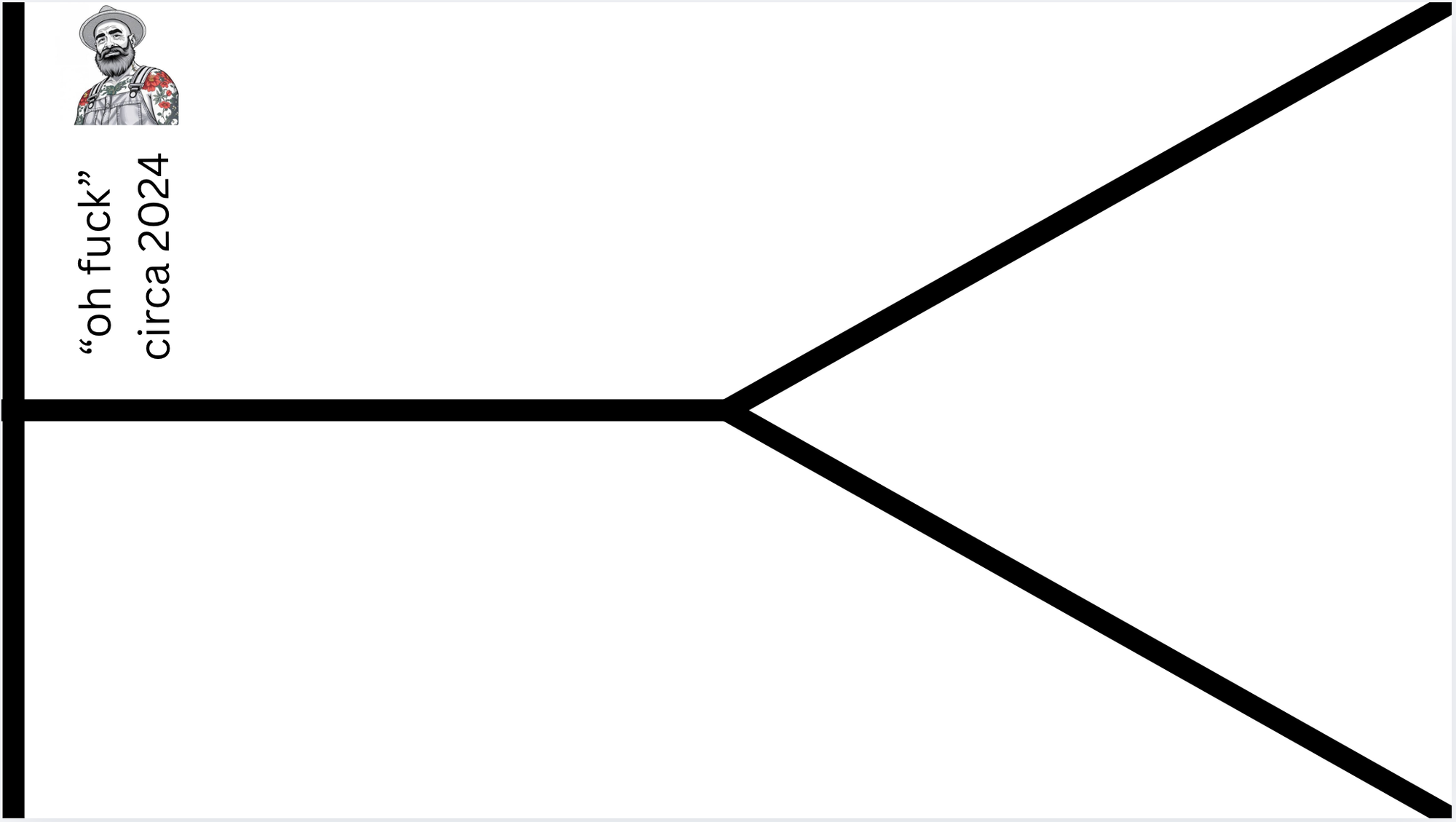

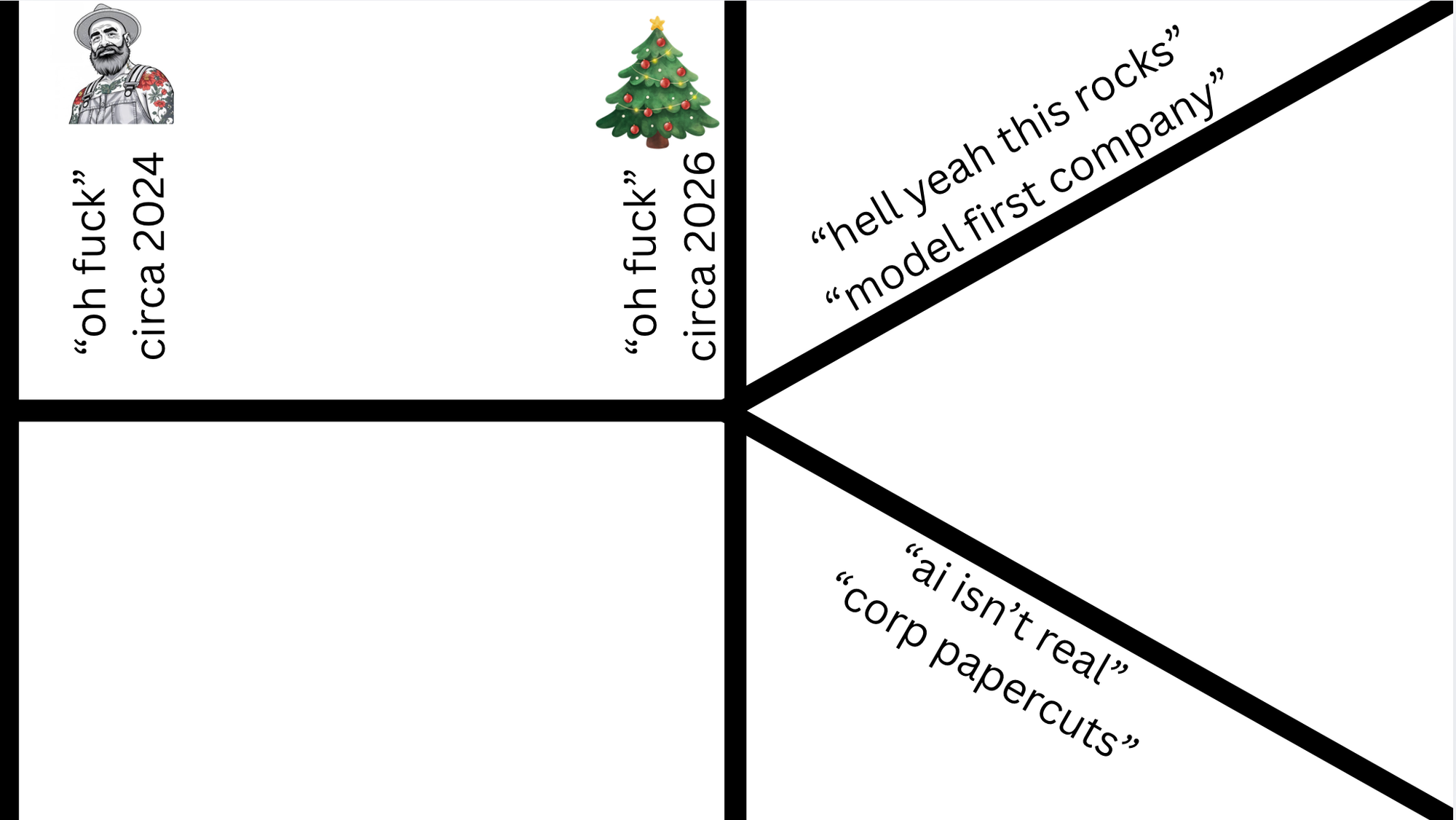

So let's open up with a classic K shape.

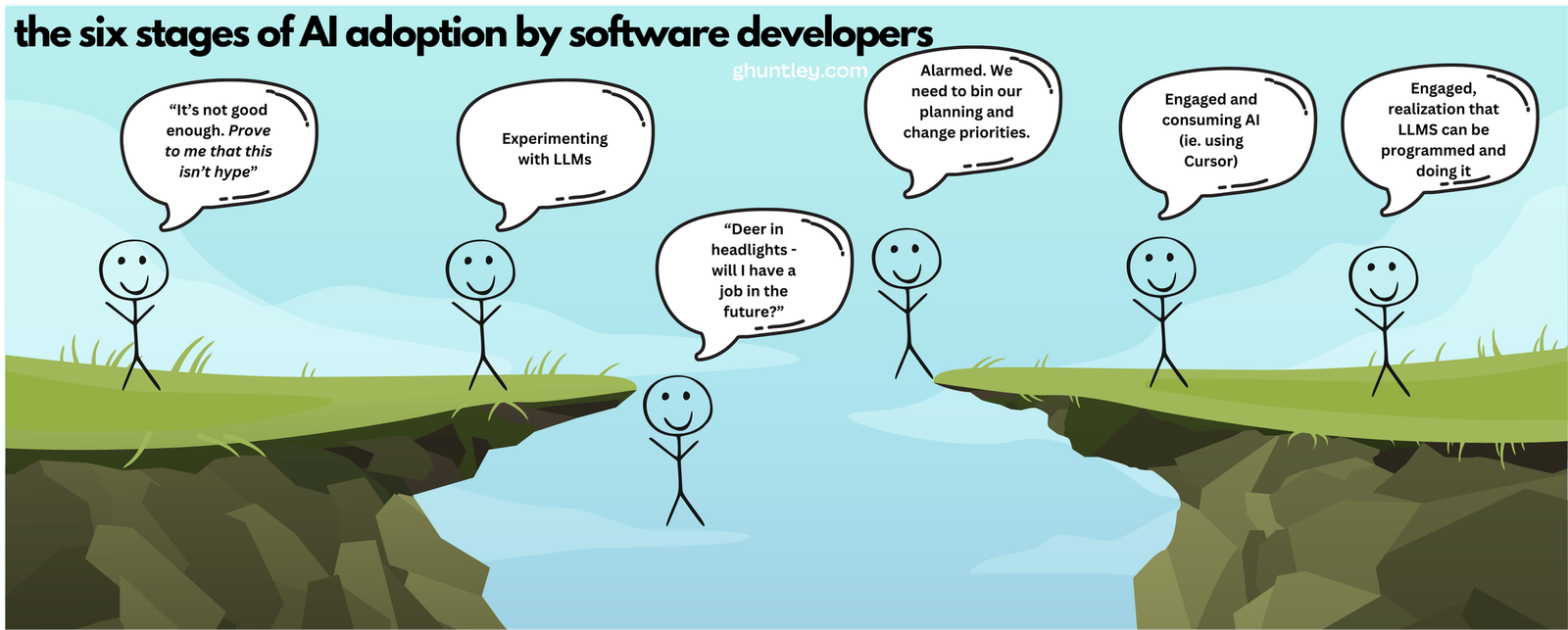

Rewind to Christmas two years ago, when I originally posted An "oh fuck" moment in time. It was clear to me where this was going. The models were already good enough back then to cause societal disruption. They were pretty wild, like wild horses, and they needed a great deal of skill to get outcomes from them...

If we fast-forward to the last Christmas holidays, many people had their "oh fuck" moment a year later, and the difference between now and then is twofold.

One: they actually picked up the guitar and played it, because the Christmas period gave them the space, capacity, and time to invest in themselves and make discoveries.

Two: the horses (the models) came with factory defaults of "broken in and ready to get shit done", which made them more accessible. They're easier to use to achieve outcomes, so people didn't need to invest as much time learning how to juice them to get disruptive outcomes.

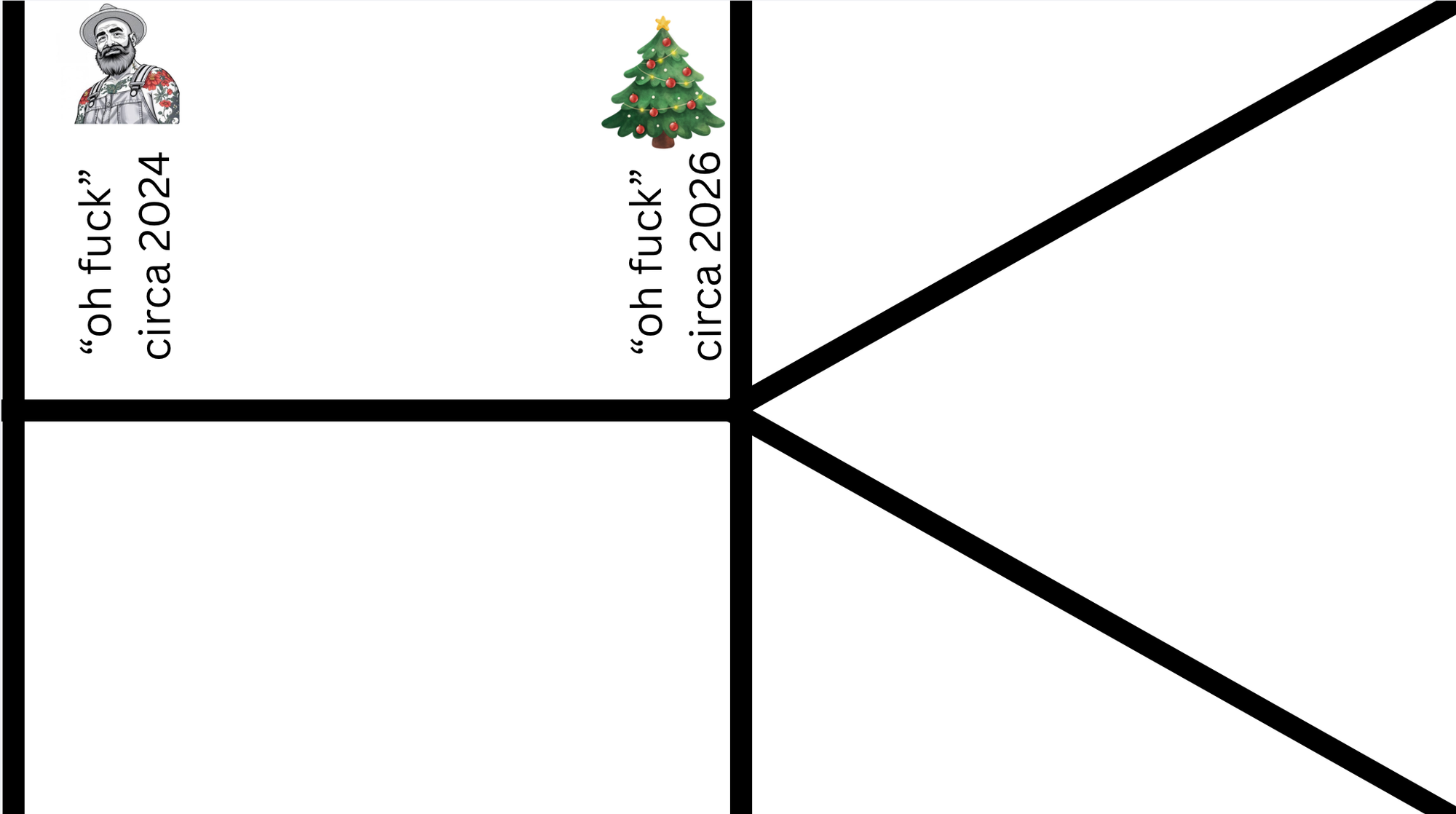

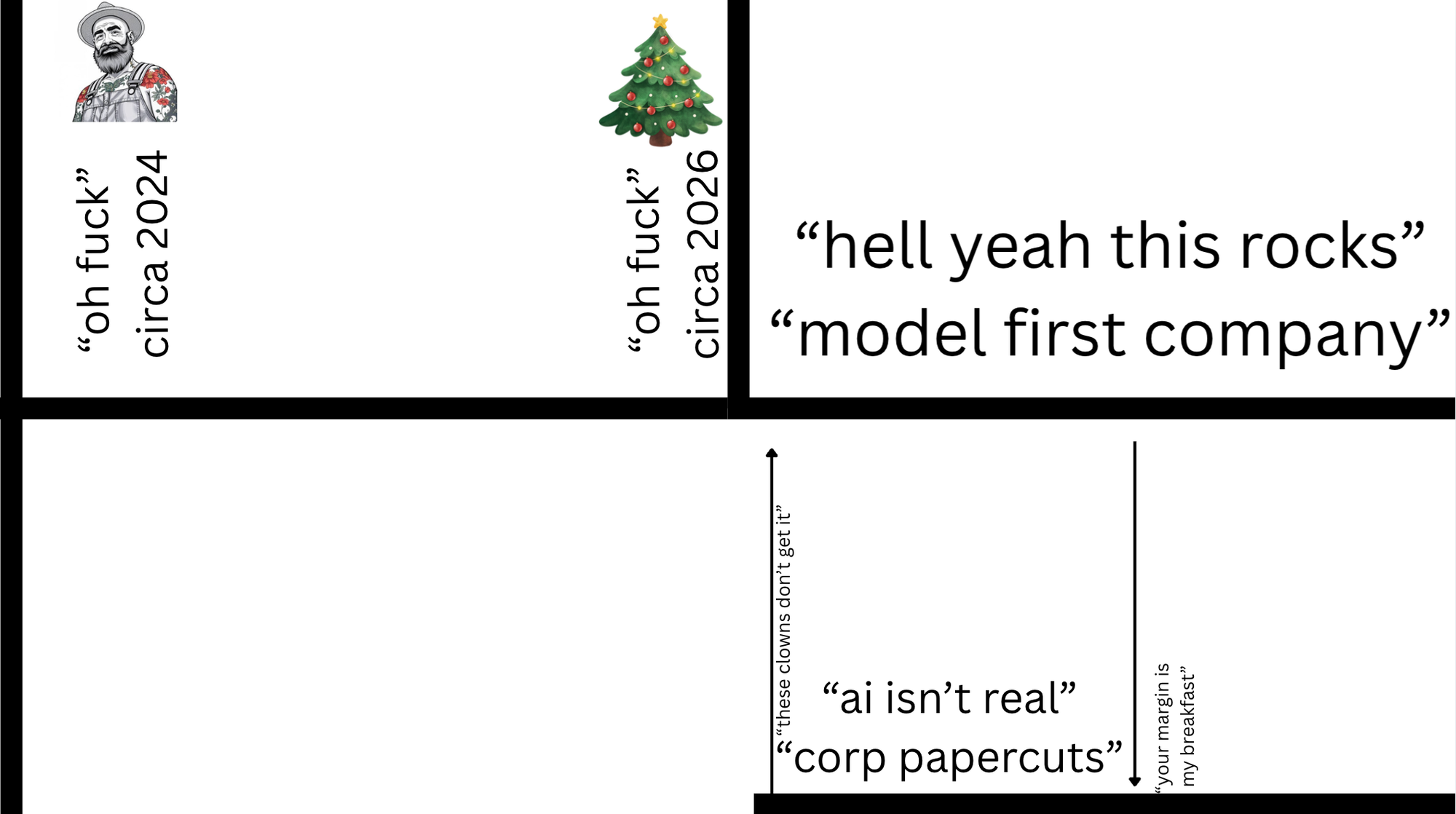

The world is now divided into two types of companies. The first type is model-first companies: lean apex predators that can operate on razor-thin margins and crush incumbents.

The second type is nearly every other company out there today, which needs to go through a people transformation program, figure out what to do with AI, and deal with the fact that the fundamentals of business have changed.

Jack is doing the right thing for his company by acting early. What will happen is that the time for a competitor to be at your door will be measured in months, not years. And as models get better, the timeframe only compresses.

The real question is what happens to the folks who, unfortunately, were laid off today. They will need jobs, and they will now see the importance of upskilling with AI. So they'll go on to their next employer or other industries and upskill with AI, and then seek to implement what is needed - automating job functions via AI.

Then the cycle continues across all industries, all disciplines.

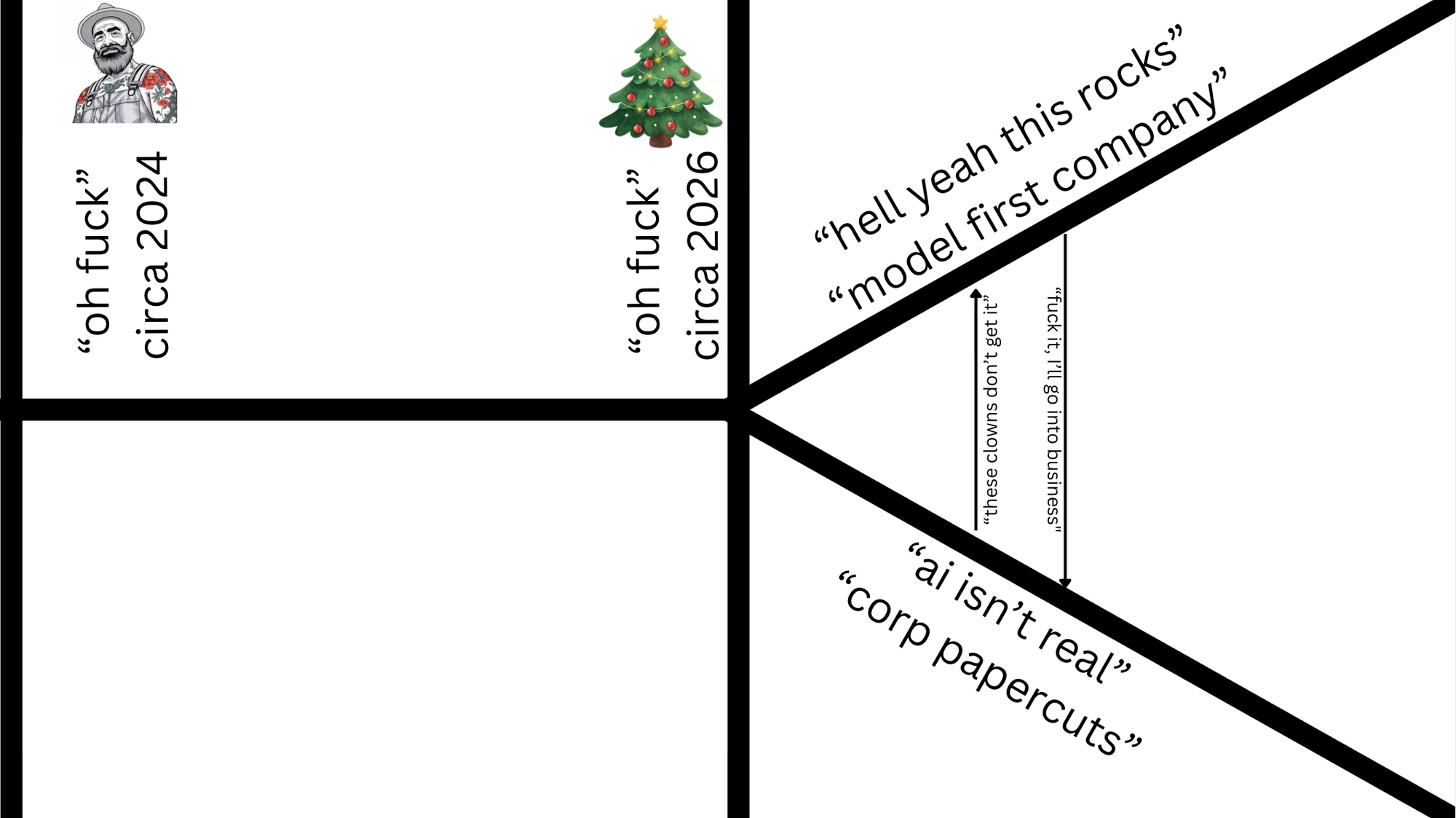

But it's not going to be triggered just by layoffs. It'll also be triggered by executives who don't get it. When you understand what is going on and how real AI is, it is maddening to be in a company surrounded by people who don't get it.

tfw when Canva's CTO puts you on full blast 😎

[ps. that 50k LOC is on all endpoints and uplifts devs+non-devs usage of AI and is single source of truth for MCP at Canva] pic.twitter.com/E19pyd9meZ

- geoff (@GeoffreyHuntley) June 29, 2025

You see, there is a difference between business suicide and employment suicide. The smart folks who don't want to commit employment suicide will leave.

I've never seen this before in my career: 28-30 year olds who refuse to use AI coding tools.

You show them what they can do augmented (not replaced) with AI and you see in their eyes that they have no damn clue of what's happening.

You can't work with these people anymore. Time…

- Ivan Burazin (@ivanburazin) March 5, 2026

The smarter ones in that segment will just go and found their own companies, then come back and do what they know. And they'll attack their employers vertically, operating leaner and meaner.

As the models get better, which is happening at a slope-on-slope, derivative pace at this stage, and as model-first companies get better and better at automating their job functions, they can be at the door of their previous employer in months, not years.

To make matters worse, as the models get better, time gets compressed, and the snake eating its tail speeds up.

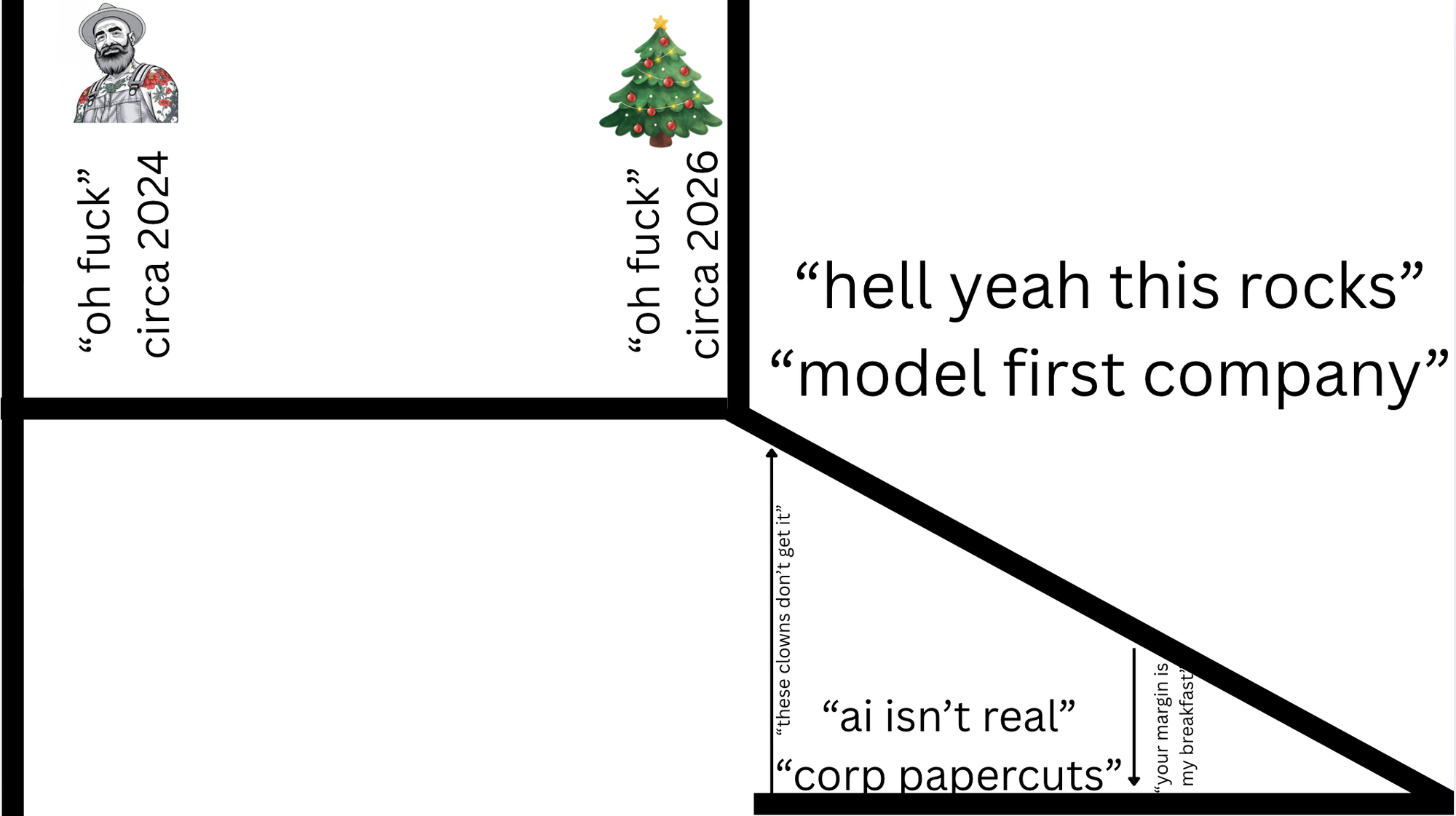

The result is that employers who, unlike Jack, did not take corrective action will have to lay people off in the long run, because margins are being squeezed by new competitors operating leaner, meaner, and faster.

Then the cycle continues across all industries, all disciplines.

As I've been stressing in my writing for almost a year now, employees trade time and skill for money from their employers. If a company is having problems adopting AI, then that is a company issue, not an employee issue.

Experience as a software engineer today doesn’t guarantee relevance tomorrow. The dynamics of employment are changing: employees trade time and skills for money, but employers’ expectations are evolving rapidly. Some companies are adapting faster than others.

Another thing I've been thinking: when someone says, “AI doesn’t work for me,” what do they mean? Are they referring to concerns related to AI in the workplace or personal experiments on greenfield projects that don't have these concerns?

This distinction matters.

Employees trade skills for employability, and failing to upskill in AI could jeopardise their future. I’m deeply concerned about this.

If a company struggles with AI adoption, that’s a solvable problem - it's now my literal job. But I worry more about employees.

In history, there are tales of employees departing companies that resisted cloud adoption to keep their skills competitive.

The same applies to AI. Companies that lag risk losing talent who prioritise skill relevance.

- June 2025 from https://ghuntley.com/six-month-recap/

Model weight first companies should be scaring the fuck out of every founder right now if they're not a utility service, for what is a moat now in the era when you can /z80 something?

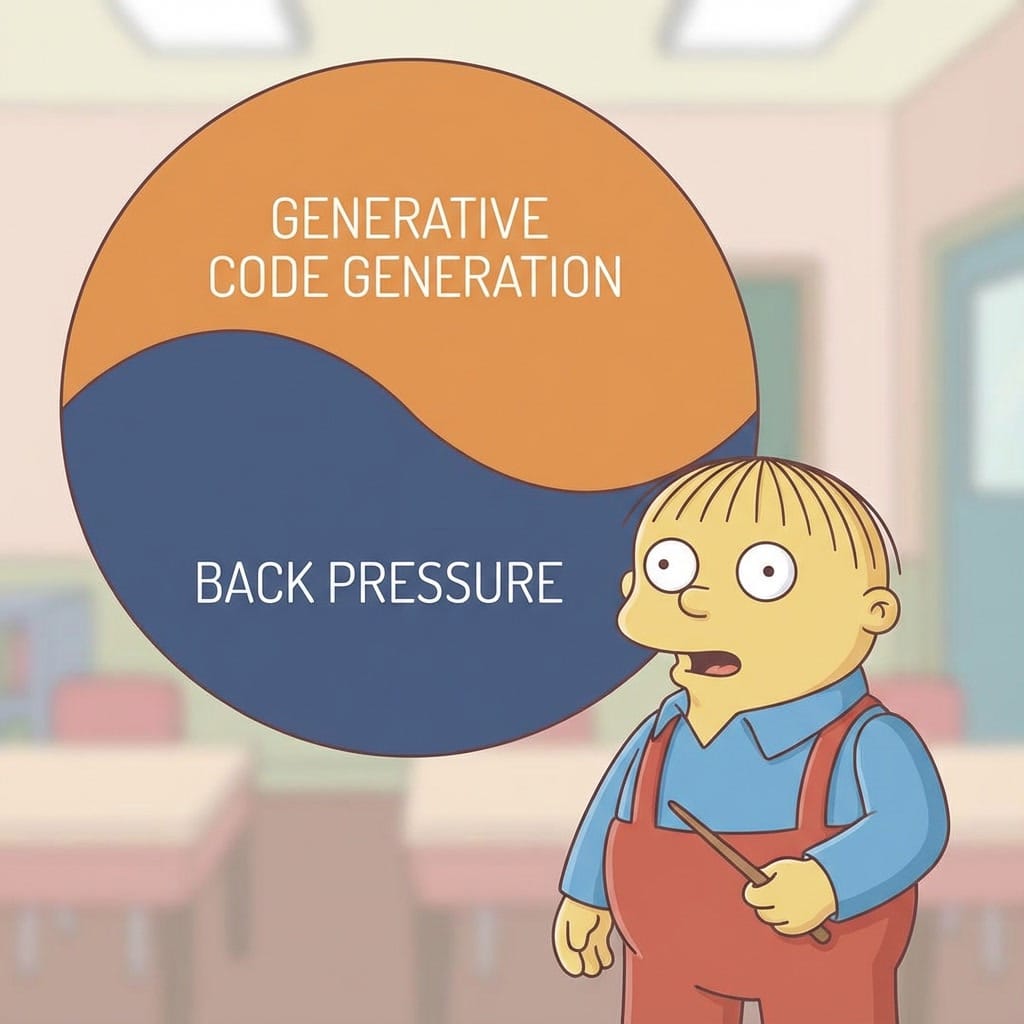

On the topic of moats, I've been thinking about this for almost a year now, and I think I've now got a clearer sense of what moats are in the AI era, but first, let's talk about what moats aren't...

- Any business model that's based on per-seat pricing. As AI starts to rip harder and harder, it's going to become much harder to maintain headcount within a corporation, because model-first companies will be coming into business and operating much leaner using utility-based pricing. Fewer heads means fewer seats. It's a margin game now.

- Any product features or platforms that were designed for humans. I know that's going to sound really wild, but understand that these days I go window-shopping on SaaS companies' websites for product features, rip a screenshot into Claude Code, and it rebuilds that product feature/platform. As we enter the era of hyper-personalised software, I think this will be the case more and more. In my latest creation, I have cloned PostHog, Jira, Pipedrive, and Calendly, and the list just keeps on growing because I want to build a hyper-personalised business that meets all my needs, with full control and everything first-party. I think we're going to see more and more model-first companies operating with this mindset.

- Any business thinking that treated the high cost of switching or migrating from one technology to another as a form of lock-in. This is provably false now. It is so easy to rip a fart into Claude Code and migrate from one technology to another. Just last week, I migrated from Cloudflare D1 to a PlanetScale Postgres database automatically using a Ralph Loop, and it just worked. Full-on data migration. When have you ever heard of a database migration going successfully unattended? We're here now, folks.
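The per-seat point in the list above is just arithmetic, so here's a minimal sketch of it. Every number is made up for illustration: the seat price, the unit price, the workload, and the 60-to-20 headcount drop are all assumptions, not figures from any real vendor or customer.

```python
# Hypothetical numbers throughout: none of these prices come from a real vendor.
PRICE_PER_SEAT = 10      # assumed monthly price per human seat
PRICE_PER_UNIT = 0.5     # assumed price per unit of work (API call, CPU hour, ...)
WORKLOAD_UNITS = 1200    # assumed monthly workload, unchanged by headcount


def per_seat_revenue(seats: int) -> float:
    """Vendor revenue when billing scales with the customer's headcount."""
    return seats * PRICE_PER_SEAT


def usage_revenue(units: int) -> float:
    """Vendor revenue when billing scales with the work actually done."""
    return units * PRICE_PER_UNIT


seat_before = per_seat_revenue(60)   # customer before AI shrinks its headcount
seat_after = per_seat_revenue(20)    # same customer, same workload, fewer humans
usage_after = usage_revenue(WORKLOAD_UNITS)

print(f"per-seat revenue: {seat_before} -> {seat_after}")
print(f"usage-based revenue after the same headcount cut: {usage_after}")
```

In this toy setup, the per-seat vendor loses two thirds of its revenue from that customer, while the usage-priced vendor loses nothing, because the work still gets done regardless of how many humans are logged in.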

If you currently work at a company that fits any of the three bullet points above, then understand that things are going to get really tight at your employer. I don't know when, but with certainty it will happen. Your best choices are either to find a new employer if the people around you don't get it, or, if there is a need and desire for automation, to lean hard into AI, automate everything, and become the champion of AI within your company. If your company has banned AI outright, you need to depart right now and find another employer.

So with that out of the way, what is a moat?

- Distribution. Any form of distribution. Brand awareness. Steaks and handshakes.

- Utility-based pricing, similar to cloud infrastructure on a cents per megabyte or CPU hour.

- Operating as a model-first company and accelerating the transformation so you can operate under the principles below:

This is going to be a really hard time for a lot of people because identity functions have been erased, and the hard thing is, it's not just software developers. It's people managers as well. If your identity function is managing people, you need to make adjustments. You need to get back onto the tools ASAP.

Were smaller but effectively cut 2/3rds by telling board I wouldn’t backfill in May 2023. Best decision as got rid of all the people who “are sick of hearing about ai”. 20ish people now do about 30x the output of what having more than 60 did 3 years ago.

- an anonymous founder in my DMs today.

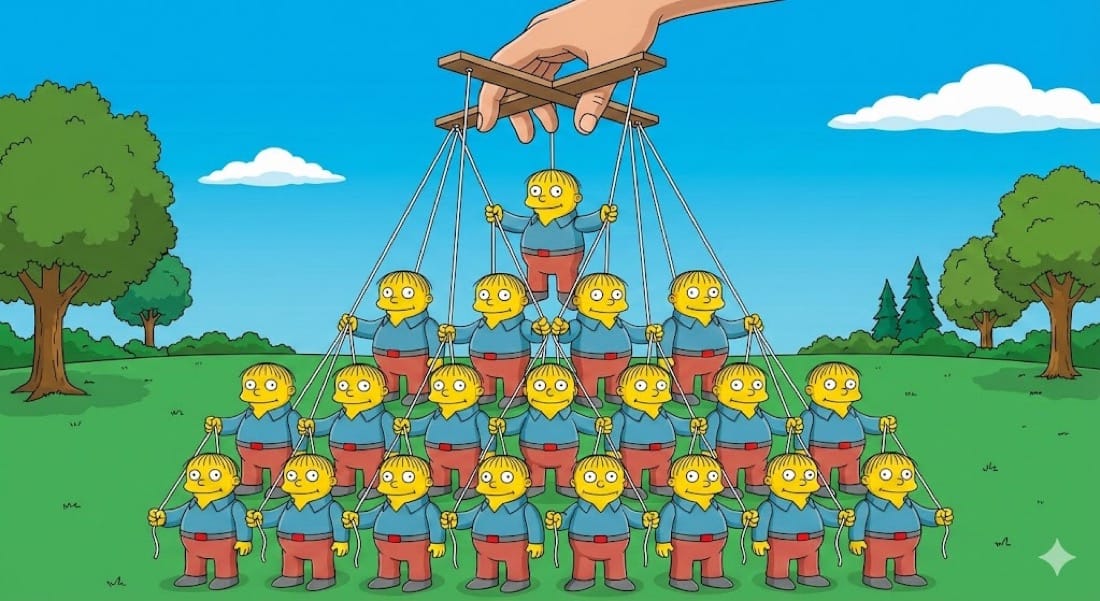

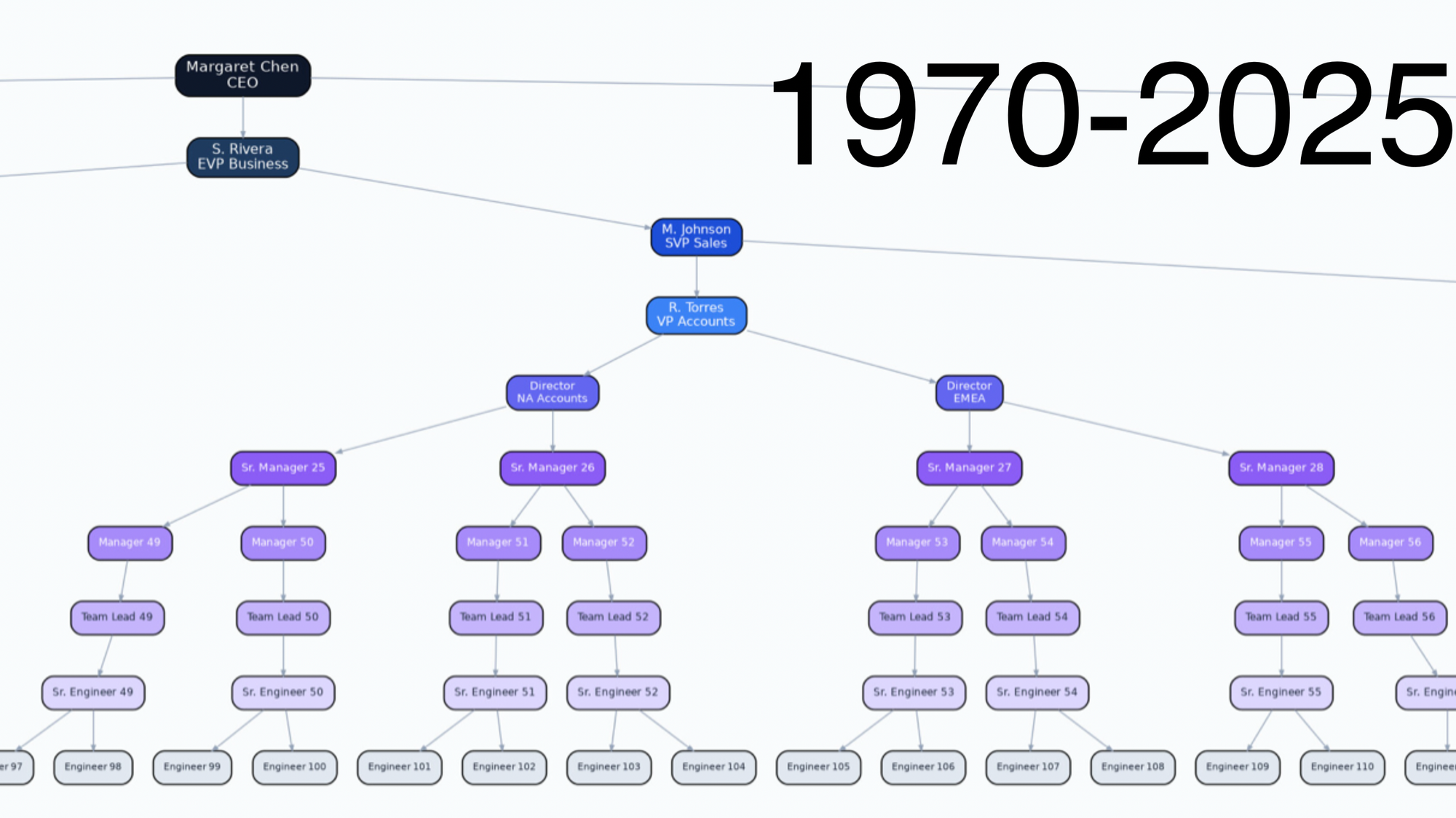

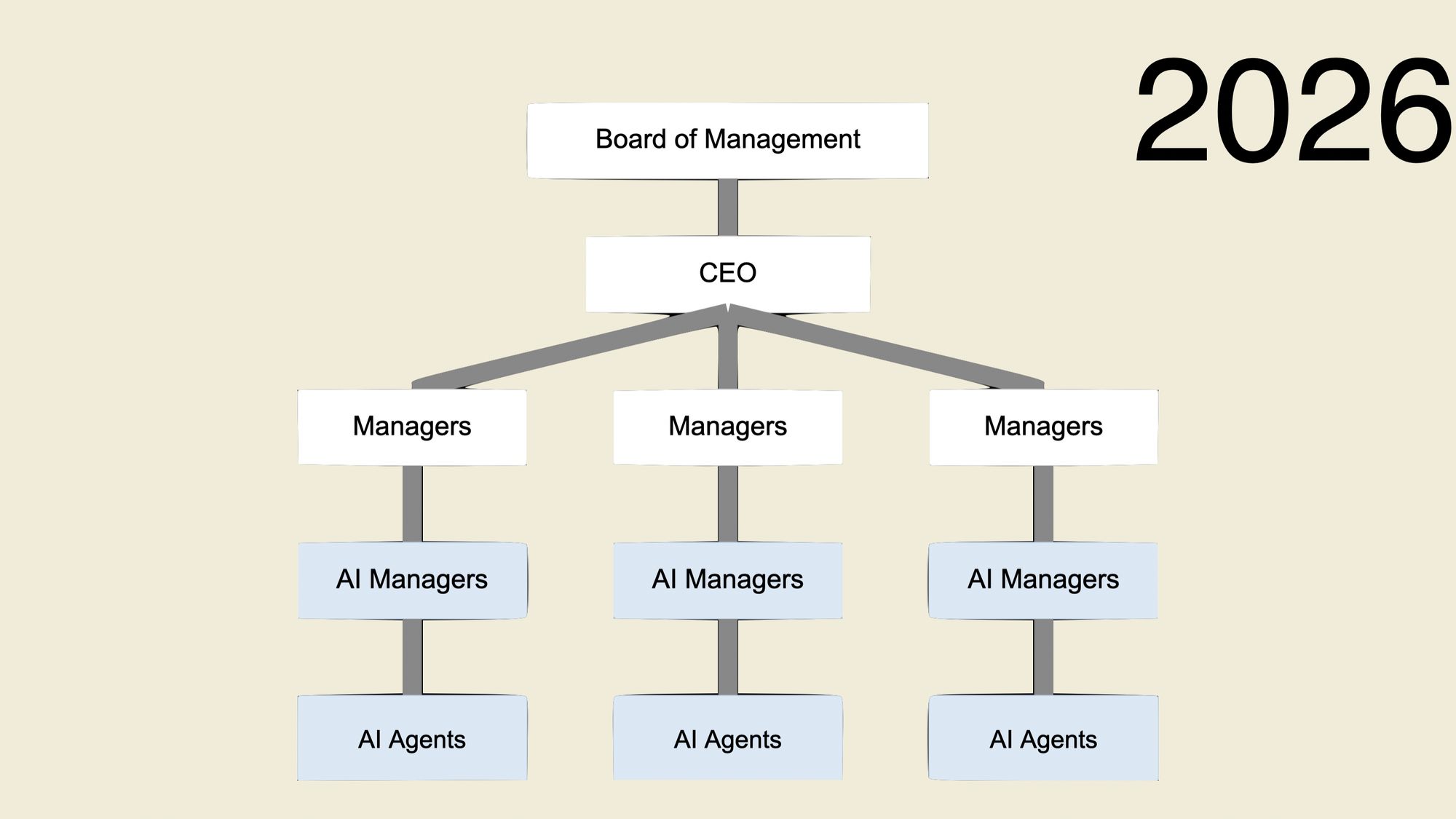

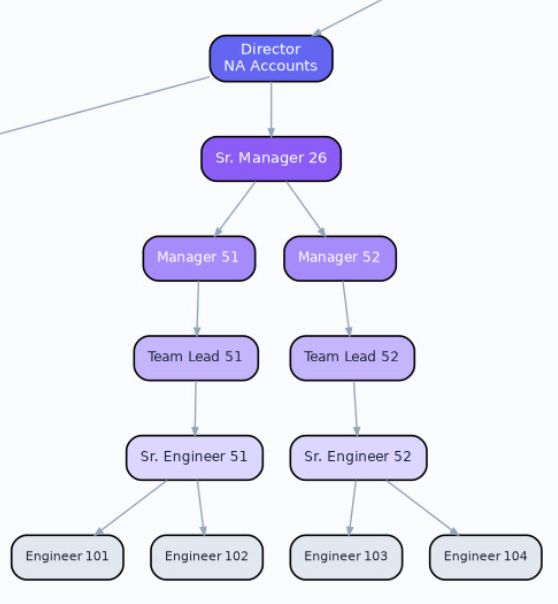

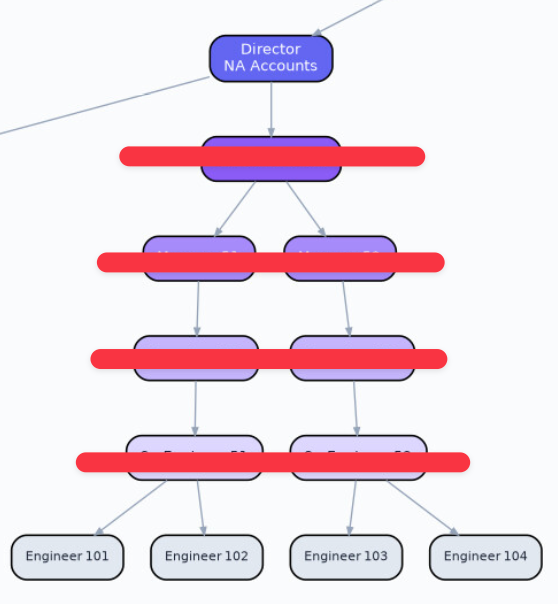

This transformation is going to be brutal. Organisations need to be designed differently and need to transform from this...

to this...

And one of the hardest things is that AI is being rammed into the world non-consensually. It's being pushed by employers and Silicon Valley. Yeah, it sucks. You gotta keep your chin up, process those feelings and deal with it, but for others it's gonna be really, really rough. There are going to be people who have spent years of their lives doing Game of Thrones, social political stuff, to get to where they are within a company, and it will have been all for nothing.

In the org chart above, consider what the value of the senior engineer, the team lead, the manager and the senior manager is in this brave new world. How much time is spent doing Dilbert activities? What if you can flatten the org chart? If you were a founder, why wouldn't you?

This is what I've been fearing for a year. I could be wrong, I don't know. Anyone who says that they know for sure is selling horseshit. One thing is absolutely certain: things will change, and there's no going back. The unit economics of business have forever changed.

Wait so you're telling me

Solo builders are running entire companies now with AI employees working 24/7

While traditional teams are still stuck in the "we need more headcount" meeting

And the solo person has better work-life balance?

How is this not the biggest story in tech…

- Alex the Engineer (@AlexEngineerAI) March 1, 2026

Whether a company does layoffs really comes down to the quality of its leadership. If they're being lazy and don't have ambitious plans, they will need to lay people off, because eventually the backlog will run dry, and everything will get automated.

This isn't me throwing shit at Jack. Like, literally, it's a cold, hard fact that you need fewer people to run a business now. So if you have too many people on your payroll, you need to make changes. Having said that, there will be ambitious founders and leaders who didn't overhire and who understand that AI enables them to do anything, and they can do it today. They can make that five-year roadmap happen in a year and provide a backlog for all employees to work on while they utilise AI.

It's going to be really interesting to see how this pans out.

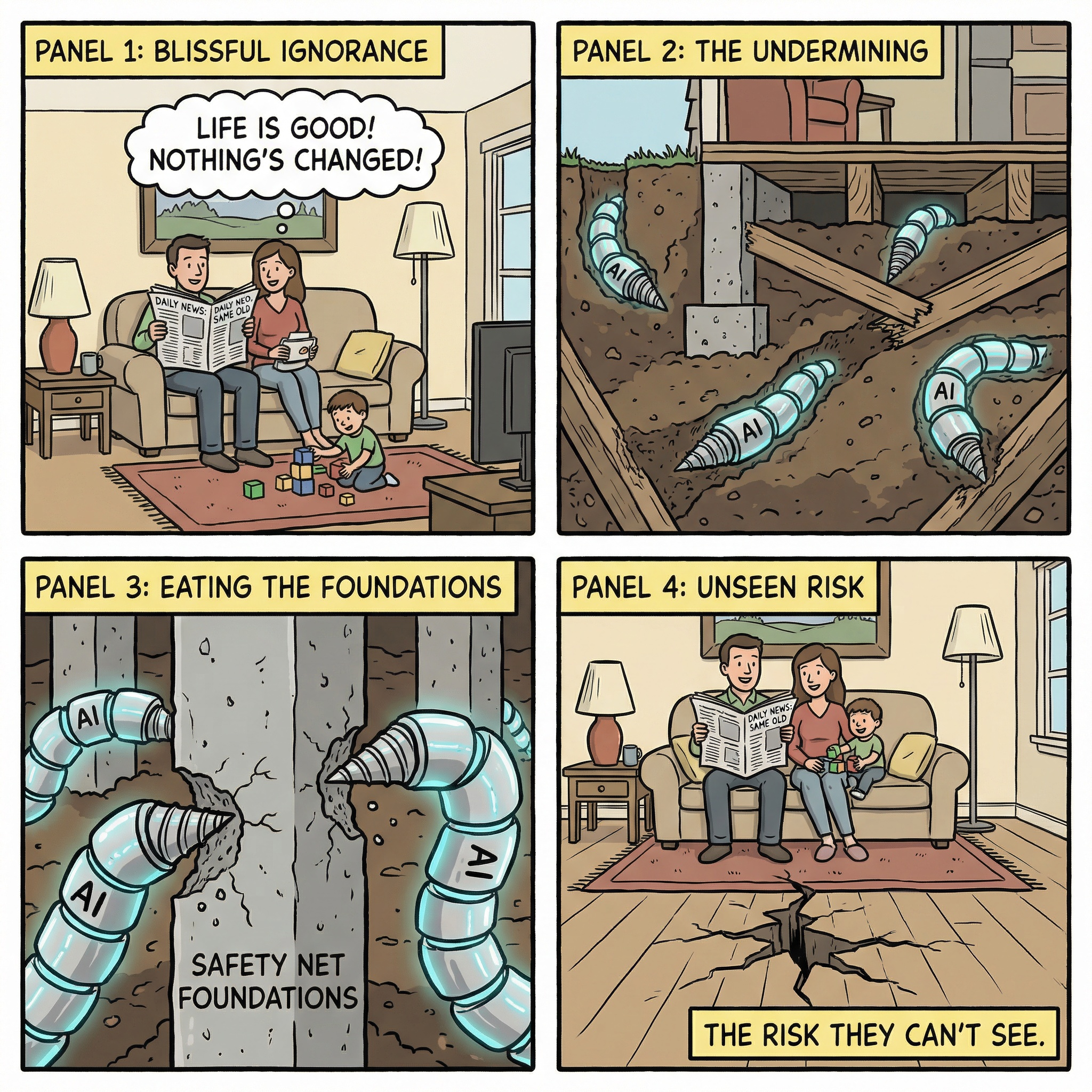

All I can ask you to do is tap someone else on the shoulder, explain the risks going forward, stress that they should treat this topic seriously and upskill, and then ask them to do the same. You see, a lot of people haven't noticed AI knocking on their door because AI is burrowing under their house.